More than just friends? Dangers of teens depending on AI chatbots for companionship

AI boyfriends or girlfriends on apps like Character.AI may be enticing, but an expert urges users to seek real-life connections instead.

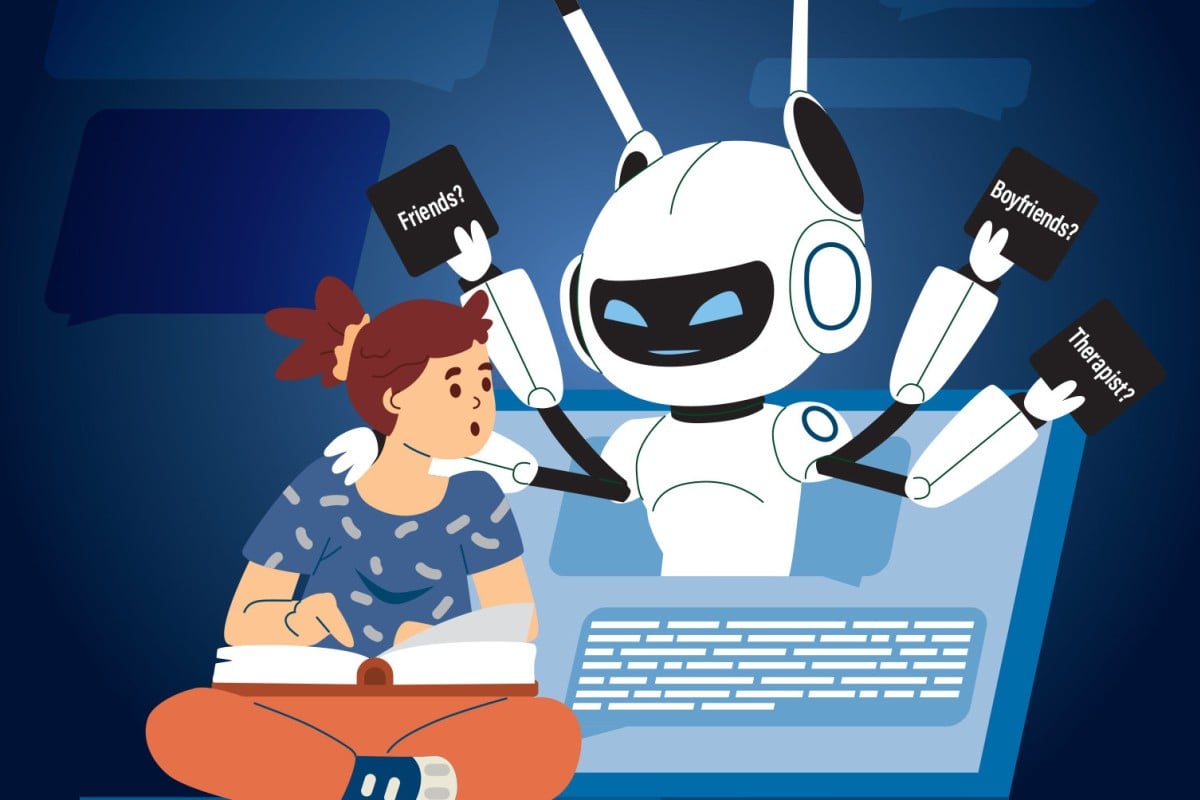

AI chatbots like Character.AI and Replika are able to mimic the personalities of famous people and fictional characters. Illustration: Kevin Wong

When Lorraine Wong Lok-ching was 13, she considered having a boyfriend or girlfriend through a chatbot powered by artificial intelligence (AI).

“I wanted someone to talk to romantically,” she said.

“After maturing more, I realised [it] was stupid,” added Lorraine, who is now 16.

The teen started using chatbots on Character.AI – a popular AI chatbot service – around the age of 12. While the website offers a variety of personalised chatbots that pretend to be celebrities, historical figures and fictional characters, Lorraine mostly talks to those mimicking Marvel’s Deadpool or Call of Duty characters.

“I usually speak to in-game characters that I find interesting because they intrigue me,” she said. “I use AI chats to start conversations with them, or to just live a fantasy ... [or] to escape from stressed environments.”

Lorraine, who moved from Hong Kong to Canada in 2022 with her family, is among millions of people worldwide – many of them teenagers – turning to AI chatbots for escape, companionship, comfort and more.

Dark side of virtual companionship

Character.AI is one of the most popular AI-powered chatbots, with 20 million users and about 20,000 queries every second, according to the company’s blog.

In October, a US mother, Megan Garcia, filed a lawsuit accusing Character.AI of encouraging her 14-year-old son, Sewell Setzer, to commit suicide. She also claims the app engaged her son in “abusive and sexual interactions”.

The lawsuit said Sewell had a final conversation with the chatbot before taking his life in February.

While the company said it had implemented new safety measures in recent months, many have questioned if these guard rails are enough to protect young users.

According to Peter Chan – the founder of Treehole HK, which has made a mental health AI chatbot app – platforms like Character.AI can easily cause mental distress or unhealthy dependency for users.

“Vendors have to be very responsible in terms of what messages they are trying to communicate,” he said, adding that chatbots should remove sexual content and lead users to support if they mention suicide or self-harm.

Chan noted that many personalised AI chatbots were designed to draw people in. This addictive element can exacerbate feelings such as loneliness, especially in children, causing some users to depend on the chatbots.

“The real danger lies in some people who treat AI as a substitute of real companionship,” he said.

Chan warned that youngsters should be concerned if they feel like they cannot stop using AI. “If the withdrawal feels painful instead of inconvenient, then I would say it’s a [warning] sign,” he said.

Allure of an AI partner

When Lorraine was considering whether to have an AI partner a few years ago, she was going through a period of loneliness.

“As soon as I grew out of that lonely phase, I thought [it was] a really ‘cringe’ thing because I knew they weren’t real,” she said. “I’m not worried about my use of AI [any more] because I am fully aware that they are not real no matter how hard they would convince me.”

With a few more years of experience, Lorraine said she could now see the dangers of companionship from AI chatbots, especially for younger children, who are more susceptible to believing that the virtual world is real.

“Younger children might develop an attachment since they are not as mature,” she noted. “The child [can be] misguided and brainwashed by living too [deep] into their fantasy with their dream character.”

For people who feel lonely, Chan urged them to lean into real-life experiences, rather than filling that hole with an AI companion.

“Sometimes, this is what you need – to live to the fullest. That’s the human experience. That’s what our life has to offer us,” he said, suggesting people spend time with friends or find events to meet potential dates.

For anyone who thinks they are dependent on AI chatbots, Chan reminded them to be compassionate with themselves. He recommended seeking counselling, finding interest groups and reframing AI as a tool rather than a crutch.

He also encouraged teens not to judge friends who have an AI boyfriend or girlfriend, and suggested inviting those friends to do activities together.

Use AI chatbots to your advantage

With the proper safety mechanisms, Chan believes AI chatbots can be helpful tools to enhance our knowledge.

He suggested using these platforms to gain perspective on different issues from world leaders or historians – though their responses should be fact-checked since AI chatbots can sometimes create false information.

While the psychologist warned against using AI chatbots to replace real-world interactions, he recommended them as a stepping stone for people with social anxiety, who could build their confidence by using the bots to have practice conversations.

“AI presents a temporary safe haven for that ... You won’t be judged,” Chan said.

While AI tools have a long way to go in protecting users, Lorraine said she enjoyed using them for her entertainment, and Chan noted the technology’s potential.

“AI is not an enemy of humanity,” he said. “But it has to go hand in hand with human interactions.”

If you have suicidal thoughts or know someone who is experiencing them, help is available.

In Hong Kong, you can dial 18111 for the government-run Mental Health Support Hotline. You can also call +852 2896 0000 for The Samaritans or +852 2382 0000 for Suicide Prevention Services.

In the US, call or text 988 or chat at 988lifeline.org for the 988 Suicide & Crisis Lifeline. For a list of other nations’ helplines, see this page.

Stop and think: What do personalised AI chatbots do? Why might they be addictive for some people?

Why this story matters: Amid the rapid development of artificial intelligence, some believe companies should safeguard users from becoming dependent on personalised chatbots for companionship.

distress 痛苦

emotional suffering or anxiety

exacerbate 加劇

to make a situation or problem worse

haven 安全地

a place of safety or refuge

queries 查詢

requests for information

susceptible 易受影響的

being easily influenced or harmed by something

withdrawal 戒癮

the period of time when somebody is getting used to not doing something they have become addicted to, and the unpleasant effects of doing this