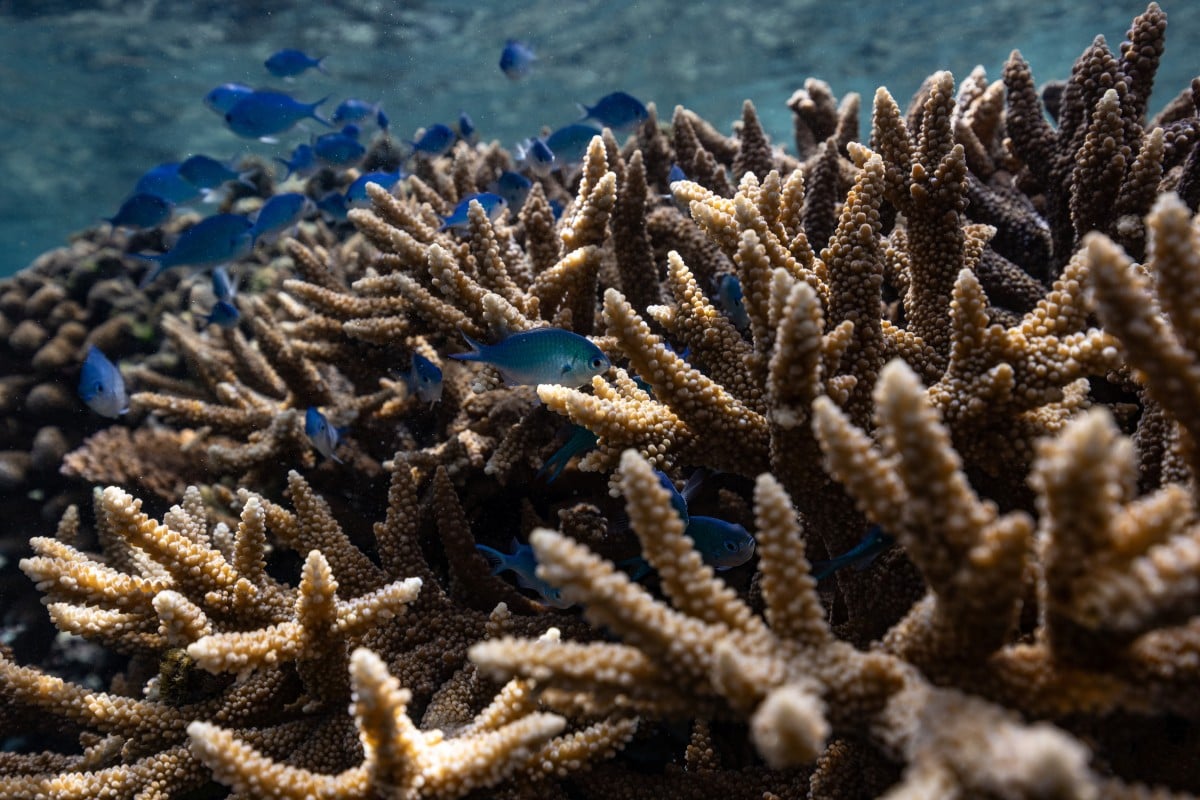

What does a dying coral reef sound like? AI is developing an answer. Photo: Reuters

Ben Williams sits quietly in his London apartment, waiting for a very specific sound to emerge from his headphones. He’ll know it when he hears it: Williams says the short, percussive bang “can shake you out of your skin.”

Williams is a PhD student in marine ecology at University College London. He is listening to underwater recordings captured in the Indo-Pacific for acoustic evidence of blast fishing. Blast fishing is a destructive practice that uses explosives to kill or stun fish. His findings are critical to Google DeepMind – where Williams is a student researcher – which is using them to train an AI tool known as SurfPerch.

Audio data has long been used by ecologists to identify potential threats such as poaching and blast fishing. They use it to assess animal populations and to evaluate ecosystem health. But computers can do it faster. Where it might take weeks for a human to sift through 40 hours of audio recordings, Williams says SurfPerch will be able to do the same within seconds – at least once it’s properly trained up.

“It can increase the amount of data we can work with by orders of magnitude,” he says.

Today, SurfPerch can identify 38 different marine sounds, including the clicks of dolphins and the whooping of Ambon damselfish. The tool is being trained on audio collected by scientists all over the world. This includes data from Google’s “Calling in Our Corals” programme, which uses human volunteers and marine ecologists to identify ocean sounds. The ultimate goal for SurfPerch, say Williams and Clare Brooks, programme manager at Google Arts & Culture, is to leverage artificial intelligence to accelerate nature conservation.

Google isn’t alone in seeing that potential. As pollution, deforestation and climate change shrink wildlife habitats, species are disappearing up to 10,000 times faster than the natural extinction rate. There is a growing need to understand biodiverse ecosystems, and deploying AI to augment acoustic monitoring is gaining steam. AI has already been used to track elephants in the Congo and to decipher bat-speak.

Companies are also interested in biodiversity intelligence, says Conrad Young, founder of Chirrup.ai. Since its launch in 2022, the London-based start-up has conducted AI-enabled bird monitoring for more than 80 farms in the UK and Ireland. Chirrup collects 14 days of acoustic data from a location, then uses its algorithm to count the number of species and name them. The greater the number of species present, the healthier the environment.

“Biodiversity is an important risk and opportunity in the supply chain,” Young says. Farms with solid track records could potentially be rewarded with government subsidies, and companies face growing regulatory pressure to reduce their environmental footprint. France already includes biodiversity risks in its mandatory disclosures for financial institutions, and more countries could follow suit: In 2022, 195 nations inked a deal to protect and restore at least 30 per cent of the Earth’s land and water by 2030.

The companies using AI to “eavesdrop” on biodiversity say it’s faster and cheaper than humans, and much easier to implement at scale. But accuracy is still a work in progress. Chirrup, for example, assigns an ornithologist to sample its algorithm’s conclusions and rate the level of confidence for each species identified.

“The truth is none of [these tools] work really well yet,” says Matthew McKown, co-founder of Santa Cruz, California-based Conservation Metrics. His company started using AI to process large sets of bioacoustic data in 2014, and last year contributed to roughly 100 conservation projects.

McKown says an algorithm is only as good as its training data, and there simply isn’t enough acoustic data out there when it comes to rare animals. The challenge is bigger in the tropics, home to many biodiversity hotspots but few acoustic sensors. Some species are also harder to pinpoint. Bitterns, for example, have a call that sounds similar to a tractor engine turning over.

Underwater acoustics are even more complex, says Tom Denton, SurfPerch’s lead software engineer. Most species of fish, sharks and rays produce low-pitched sounds that are difficult to pick up, while noises from human activities such as offshore drilling travel long distances and can drown out wildlife.

And while AI can help evaluate ecosystems, there’s still some question as to how the data might be used to bolster conservation. Unlike climate change mitigation, which centres on reducing greenhouse gas emissions in the atmosphere, there is no easy shared metric for biodiversity protection. Even the “30 per cent by 2030” goal leaves plenty of room for interpretation.

Williams at Google DeepMind agrees that leveraging AI for conservation is still “in its early days.” But initial results show some promise. Training an algorithm to identify blast fishing, for example, helps build tangible evidence of a practice that is illegal in many countries but still relatively common. That, in turn, helps make a case for law enforcement to step up sea patrols.

SurfPerch also monitors coral reefs, crucial marine ecosystems that underpin an estimated US$2.7 trillion a year of goods and services. There, the AI tool gets around practical limitations by focusing less on individual species and more on a reef’s “soundscape” – sounds produced collectively by all of the species living on it. AI is learning to distinguish the soundscapes of degraded reefs from healthy ones, a framework that could be used to evaluate restoration efforts or to determine when commercial fishing bans are needed.