Wilczek’s Multiverse | From physics to mind: where AI really came from

Despite grumbles in the scientific community, the Nobel Prize for Physics going to artificial intelligence pioneers was inspired

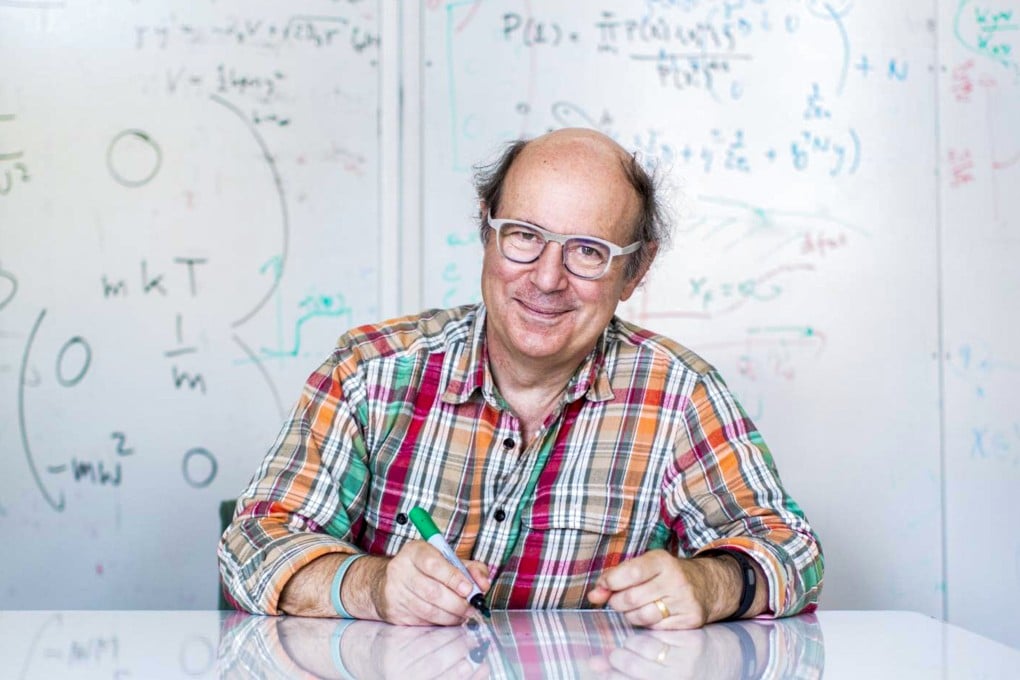

Today, American theoretical physicist and Nobel laureate Frank Wilczek launches the first in a series of monthly columns written exclusively for the South China Morning Post. Here, he reflects on the significance of this year’s physics Nobel Prize and its implications for future work in artificial neural networks.

Biological neurons come in many varieties and can be fiendishly complex, but Warren McCulloch and Walter Pitts, seeking to understand the essence of how they empower thought, defined drastically idealised caricatures of neurons with mathematically convenient properties. Their model “neurons” respond to input consisting of one or more “pulses” – which may come from multiple sources – by outputting, when the total input is large enough, pulses of their own. Such neurons can be wired together to make functional networks. These networks can transform streams of input pulses into streams of output pulses, with processing by intermediate neurons in between.

McCulloch and Pitts showed that their artificial neural networks could do all the basic operations of logical processing needed for universal computation.
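To make the logic concrete, here is a minimal Python sketch of threshold neurons of the kind described above. It assumes the common textbook simplification: binary input pulses, a numerical weight for each input channel (negative for an inhibitory channel) and a fixed firing threshold. McCulloch and Pitts’ own formulation differs in its details. The function names (`mp_neuron`, `and_gate` and so on) and the hand-picked weights and thresholds are illustrative choices, not taken from their work.

```python
from itertools import product


def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary input pulses reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Basic logic gates, each a single threshold neuron with hand-chosen weights.
def and_gate(a, b):
    return mp_neuron((a, b), (1, 1), threshold=2)  # fires only if both inputs pulse


def or_gate(a, b):
    return mp_neuron((a, b), (1, 1), threshold=1)  # fires if either input pulses


def not_gate(a):
    # An inhibitory (negative) weight with threshold 0: fires only when the input is silent.
    return mp_neuron((a,), (-1,), threshold=0)


def xor_gate(a, b):
    # No single threshold neuron computes XOR, but a small network with
    # intermediate neurons does: XOR(a, b) = AND(OR(a, b), NOT(AND(a, b))).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a, b in product((0, 1), repeat=2):
    print(a, b, "AND", and_gate(a, b), "OR", or_gate(a, b), "XOR", xor_gate(a, b))
```

AND, OR and NOT together suffice to build any Boolean function, which is the sense in which such networks cover the basic operations of logic. The XOR gate shows intermediate neurons doing work that no single threshold neuron can.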

McCulloch and Pitts’ work attracted the attention and admiration of several of the great pioneers of modern computing, including Alan Turing, Claude Shannon and John von Neumann. But mainstream, practical computing went in a different direction. In it, the basic logical operations were implemented directly in simple transistor circuits and orchestrated using explicit instructions, i.e. programs. That approach has been wildly successful, of course. It brought us the brave new cyberworld we live in today.

But artificial neural networks were not entirely forgotten. Though such networks provide unnecessarily complicated and clumsy ways to do logic, they have a big potential advantage over standard transistor circuits. That is, they can change gracefully. Specifically, we can change the input-output rule for a neuron by adjusting the relative importance – technically, “weight” – we assign to input from different channels.
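The idea of changing a neuron’s rule by adjusting its weights can be sketched with a simple error-correcting update. The example below borrows the perceptron learning rule, associated with Frank Rosenblatt and not described in this column, purely as an illustration: a neuron wired by hand to compute AND is nudged, one mistake at a time, until it computes OR. The function names, the learning rate of 0.5 and the choice to adjust the threshold as well (equivalent to a weight on an always-active input) are assumptions made for the sketch.

```python
# Training data for the target rule: logical OR of two binary inputs.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def fire(inputs, weights, threshold):
    """Return 1 if the weighted input sum reaches the threshold, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= threshold else 0


def train(samples, weights, threshold, rate=0.5, epochs=20):
    """Perceptron-style training: after each wrong output, nudge the weights
    of the active input channels and the threshold toward the target."""
    weights = list(weights)
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - fire(inputs, weights, threshold)  # +1, 0 or -1
            if error:
                mistakes += 1
                # Only channels that carried a pulse (x = 1) have their weight changed.
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                # Lowering the threshold makes firing easier; raising it makes firing harder.
                threshold -= rate * error
        if mistakes == 0:  # every sample correct: the new rule has been learned
            break
    return weights, threshold


# Start from a neuron wired to compute AND (weights 1 and 1, threshold 2) ...
new_weights, new_threshold = train(OR_SAMPLES, weights=(1, 1), threshold=2)
# ... and check that it now computes OR instead.
for inputs, target in OR_SAMPLES:
    print(inputs, fire(inputs, new_weights, new_threshold), "expected", target)
```

With these settings the neuron ends up with weights of 1.5 and 1.5 and a threshold of 1.0. Each correction shifts the numbers by a small, fixed step rather than rewiring the circuit.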