
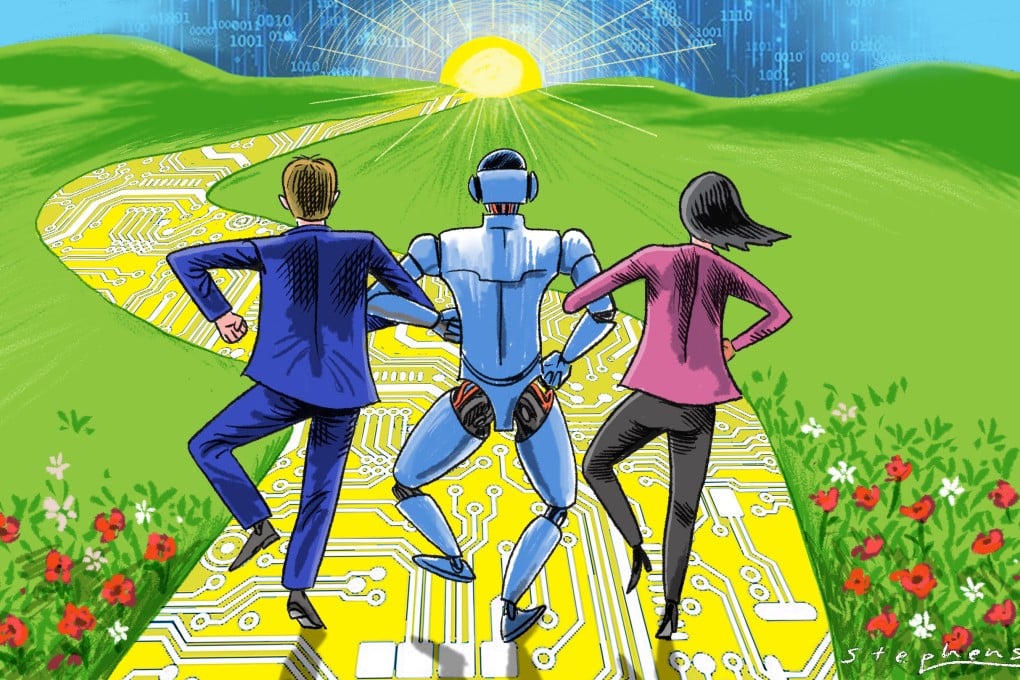

Opinion | Fears over AI dangers are hindering the chance to create a better future

- Given the rapid evolution of AI and the diverse governance approaches worldwide, we should seek adaptable cooperation that finds broad common ground

- The focus on AI’s threats means we could miss an opportunity to forge a future where we coexist with technology in a world of grace and justice


Last week, a breath of optimism swept through the news as the United States and China made headlines for encouraging reasons. The highly anticipated meeting between President Xi Jinping and US President Joe Biden concluded with both nations expressing a shared understanding across a broad spectrum of interests. This included not only traditional sectors such as the economy, trade and agriculture but also burgeoning fields such as artificial intelligence.

The discussion on AI seems to resonate with the appeal for intergovernmental cooperation that Henry Kissinger and Graham Allison proposed last month. Echoing those sentiments, there were hopes the two powers would agree on AI arms control or at least reach a consensus to exclude AI from nuclear command systems.

The enduring peace among nuclear nations since 1945 underlines a mutual recognition of nuclear perils. AI technology has amplified these risks, making the non-use of nuclear weapons a matter of course. However, the path to effective AI governance is complex, and the theory of knowledge differs vastly from practical epistemology, especially in the unpredictable theatre of war.

AI is more than just a substitute for specific human functions. Its essence is shaped by the data it processes and the complexity of its algorithms. Unlike commercial applications, AI systems for nuclear warfare cannot readily amass extensive training data. The confidentiality of enemy information and the dynamic nature of warfare further complicate data acquisition.

Even the most advanced AI algorithms fall short of emulating the nuanced decision-making of an experienced general who bases decisions on a wealth of professional knowledge and real-life experience. This differs significantly from current discussions about the military application of AI, often encapsulated in the “human in the loop” concept.

Algorithms and software are very intricate, so it’s difficult to decide who should be in which loop and how they should interact. Moreover, even if China and the US reach an agreement, another conundrum arises. How could we verify compliance, and would any nation permit external inspection of its nuclear launch system’s software code?
