Opinion | A short pause in development of powerful AI can ensure proper data and privacy controls in the long run

- Time is needed to develop a shared safety protocol to manage the massive amounts of data used in AI training, which are often pulled from the internet and may include user conversations

- Tech companies – and AI developers in particular – have a duty to review and critically assess the implications of their AI systems for data privacy and ethics, and to ensure guidelines are adhered to

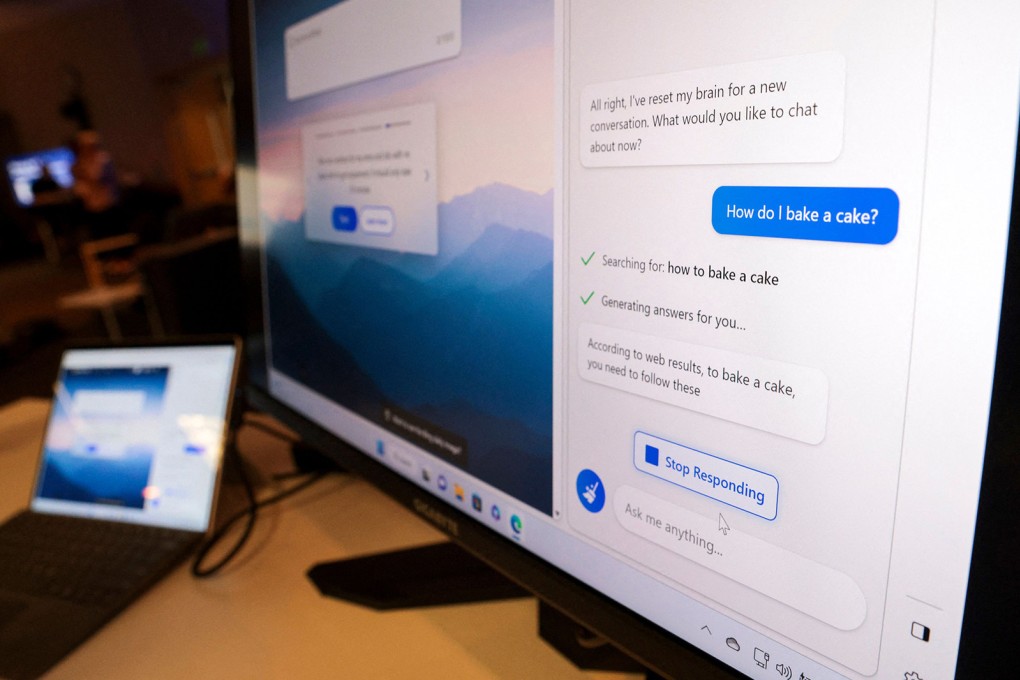

Artificial intelligence and, in particular, the rise of generative AI-powered chatbots such as OpenAI’s ChatGPT, Google’s Bard, Microsoft’s Bing chat and Baidu’s Ernie Bot have been making waves.

While many embrace these technological breakthroughs as a blessing for humankind, the privacy and ethical implications behind the use of AI demand closer scrutiny. An open letter calling for a six-month pause on the training of AI systems more powerful than GPT-4, to allow for the development and implementation of shared safety protocols, has swiftly garnered more than 26,000 signatories.

According to McKinsey & Co, generative AI describes algorithms “that can be used to create new content, including audio, code, images, text, simulations and videos”. Unlike earlier forms of AI that focus on automation or primarily make decisions by analysing big data, generative AI has a seemingly magical ability to respond to almost any request in a split second, often giving a creative, new and convincingly humanlike response, which sets it apart from its predecessors.

The potential of generative AI to transform many industries by increasing efficiency and uncovering novel insights is immense. Several tech giants have already started to explore how to implement generative AI models in their productivity software.

In particular, general-knowledge AI chatbots based on large language models, like ChatGPT, can help draft documents, create personalised content, respond to employee inquiries and more. One of the world’s biggest investment banks has reportedly “fine-tune trained” a generative AI model to answer questions from its financial advisers on its investment recommendations.

But looking at generative AI through rose-coloured spectacles reveals just one side of the story. The use of AI chatbots and their output also generates privacy concerns.
